The Enlightenment Foundation Libraries (EFL) have several bindings for other languages that ease the creation of end-user applications and speed up their development. Among them is a binding for JavaScript using the Spidermonkey engine. The questions are: is it fast enough? Does it slow down your application? Is Spidermonkey the best JS engine to use?

To answer these questions, Gustavo Barbieri created some C, JS and Python benchmarks to compare the performance of EFL in each of these languages. The JS benchmarks used Spidermonkey as the engine, since elixir already existed for EFL. I then created new bindings (with only the necessary functions) to compare it against other well-known JS engines: V8 from Google and JSC (also known as Nitro) from WebKit.

Libraries setup

EFL revision 58186 was used for all benchmarks. The setup for each engine was as follows:

- Spidermonkey: I’ve used version 1.8.1-rc1 with elixir, the bindings already available in the EFL repository;

- V8: version 3.2.5.1, using a simple binding I created for EFL. I named this binding ev8;

- JSC: WebKit’s sources are needed to compile JSC. I’ve used revision 83063. Compiling with CMake, I chose the EFL port and enabled the SHARED_CORE option in order to have a separate library for JavaScript.

Benchmarks

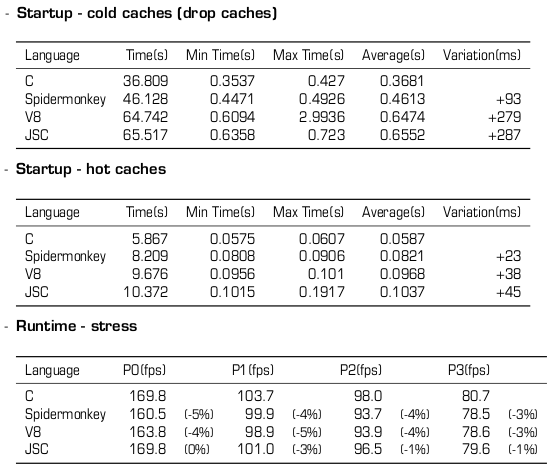

Startup time: This benchmark measures the startup time by executing a simple application that imports evas, ecore, ecore-evas and edje, brings in some symbols and then iterates the main loop once before exiting. I measured the startup time for both hot and cold cache cases. In the former, the application is executed several times in sequence; in the latter, each run is preceded by a call to drop all caches, so the libraries have to be loaded from disk again.
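To make the hot and cold cache distinction concrete, here is a minimal sketch of how such a measurement could be scripted. It is not the harness used for the numbers in this post: the function names and the placeholder command are assumptions, and the cold-cache helper relies on the Linux `/proc/sys/vm/drop_caches` interface, which needs root.

```python
import subprocess
import sys
import time


def time_startup(cmd, runs=5):
    """Run cmd `runs` times back to back and return each wall-clock time in seconds."""
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        subprocess.run(cmd, check=True,
                       stdout=subprocess.DEVNULL, stderr=subprocess.DEVNULL)
        timings.append(time.perf_counter() - start)
    return timings


def drop_caches():
    """Flush dirty pages, then ask Linux to drop page cache, dentries and inodes (root only)."""
    subprocess.run(["sync"], check=True)
    with open("/proc/sys/vm/drop_caches", "w") as f:
        f.write("3\n")


def time_cold_startup(cmd, runs=5):
    """Like time_startup, but drop the caches before every single run."""
    timings = []
    for _ in range(runs):
        drop_caches()
        timings.extend(time_startup(cmd, runs=1))
    return timings


if __name__ == "__main__":
    # Placeholder command: it stands in for the real benchmark executable.
    cmd = [sys.executable, "-c", "pass"]
    hot = time_startup(cmd)
    print("hot cache, best of %d runs: %.4f s" % (len(hot), min(hot)))
```

With hot caches the first run or two warm things up, so taking the minimum or the median of the later runs gives a more stable figure than the mean.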

Runtime - Stress: This benchmark executes as many frames per second as possible of a render-intensive operation. The application is not very heavy, but it does some loops and math and interacts with EFL. A typical application would usually do far fewer operations per frame, because many operations are done in EFL itself, in C, such as list scrolling, which is done entirely in elm_genlist. This benchmark is made up of 4 phases:

- Phase 0 (P0): Un-scaled blend of the same image 16 times;

- Phase 1 (P1): Same as P0, with additional 50% alpha;

- Phase 2 (P2): Same as P0, with additional red coloring;

- Phase 3 (P3): Same as P0, with additional 50% alpha and red coloring.

The C and elixir versions are available in the EFL repository.
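The idea of "as many frames per second as possible" can be sketched as a tight loop that counts how many frames complete in a fixed time window. The code below is only an illustration: `fake_frame` is a hypothetical stand-in for the real rendering work done through EFL, and the phase parameters (128 for 50% alpha, pure red for the coloring) are assumed values.

```python
import time

# Illustrative phase parameters (assumed values): 50% alpha is taken as 128
# out of 255, and "red coloring" as a pure red tint.
PHASES = {
    "P0": {"alpha": 255, "tint": (255, 255, 255)},
    "P1": {"alpha": 128, "tint": (255, 255, 255)},
    "P2": {"alpha": 255, "tint": (255, 0, 0)},
    "P3": {"alpha": 128, "tint": (255, 0, 0)},
}


def fake_frame(alpha, tint, images=16):
    """Stand-in for one frame: compute an alpha-scaled tint for each of the 16 images."""
    return [tuple(c * alpha // 255 for c in tint) for _ in range(images)]


def measure_fps(render_frame, duration=1.0):
    """Call render_frame in a tight loop for about `duration` seconds; return frames per second."""
    frames = 0
    start = time.perf_counter()
    while time.perf_counter() - start < duration:
        render_frame()
        frames += 1
    return frames / (time.perf_counter() - start)


if __name__ == "__main__":
    for name, params in PHASES.items():
        fps = measure_fps(
            lambda p=params: fake_frame(p["alpha"], p["tint"]), duration=0.5)
        print("%s: %.0f FPS" % (name, fps))
```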

Runtime - animation: Usually an application doesn’t need “as many FPS as possible”; instead it wants to limit itself to a certain number of frames per second. For example, the iPhone’s browser tries to keep a constant 60 FPS. This is the value I used in this benchmark. The same application as in the previous benchmark is executed, but it tries to maintain a constant frame rate.
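A fixed frame rate boils down to rendering a frame and then waiting until the next frame deadline. The following is a minimal sketch of that idea using plain sleeps; it is not how the benchmark application itself schedules frames (that is left to the EFL main loop), and `run_capped` and `render_frame` are hypothetical names.

```python
import time


def run_capped(render_frame, target_fps=60, duration=1.0):
    """Render at no more than target_fps for about `duration` seconds; return the achieved FPS."""
    frame_time = 1.0 / target_fps
    start = time.perf_counter()
    next_deadline = start + frame_time
    frames = 0
    while time.perf_counter() - start < duration:
        render_frame()
        frames += 1
        # Sleep only for whatever is left of this frame's time slot.
        remaining = next_deadline - time.perf_counter()
        if remaining > 0:
            time.sleep(remaining)
        next_deadline += frame_time
    return frames / (time.perf_counter() - start)


if __name__ == "__main__":
    print("achieved %.1f FPS" % run_capped(lambda: None, target_fps=60))
```

If every engine can finish its frame well within the 1/60 s slot, the loop spends the rest of the slot sleeping and all of them report roughly the same frame rate.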

Results

The first computer I used to test these benchmarks on was my laptop. It’s a Dell Vostro 1320, Intel Core 2 Duo with 4 GB of RAM and a standard 5400 RPM disk. The results are below.

The first thing to notice is that there are no results for the “Runtime - animation” benchmark. This is because all the engines kept a constant 60 FPS, so there were no interesting results to show. The first benchmark shows that V8’s startup time is the shortest when the application and libraries have to be loaded from disk. JSC was the slowest and Spidermonkey was in between.

With hot caches, however, the scenario is completely different, with JSC being almost as fast as the native C application. V8 follows with a slightly larger delay, and Spidermonkey is the slowest.

The runtime-stress benchmark shows that all the engines perform well when there’s some considerable load in the application, i.e. when P0 is left out of the comparison. JSC always ran at the same speed as native code; Spidermonkey and V8 showed an impact only when considering P0 alone.

The next machine I ran these benchmarks on was a Pandaboard, to see how well the engines perform on an embedded platform. The Pandaboard has an ARM Cortex-A9 processor with 1 GB of RAM, and the partition containing the benchmarks is on an external flash storage drive. The results for each benchmark follow:

Once again, runtime-animation is not shown since it had the same results for all engines. In the startup tests, Spidermonkey was now much faster than the others, followed by V8 and JSC, with both hot and cold caches. In the runtime-stress benchmark, all the engines performed well, as on the first computer, but now JSC was the clear winner.

There are several points to consider when choosing an engine to be used as a binding for a library such as EFL. The raw performance and startup time seem to be very close to those achieved with native code. Recently there were some discussions on the EFL mailing list regarding which engine to choose, so I thought it would be good to share the numbers above. It’s also important to note that these bindings take an approach similar to elixir’s, mapping each function call in JavaScript to the corresponding native function. I did this to be fair in the comparison among them, but depending on the use case it would be good to have a JS binding similar to what the Python bindings did, embedding the function calls in real Python objects.
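The difference between the two binding styles can be sketched as follows. This is only an illustration in Python with a made-up "native" layer: none of the function or class names below come from elixir, ev8 or the Python bindings, and the dictionary stands in for objects that would really live in C.

```python
# Hypothetical "native" layer: stands in for C functions exposed one-to-one.
_objects = {}


def native_rectangle_add():
    """Create a rectangle on the native side and return an opaque handle."""
    handle = len(_objects) + 1
    _objects[handle] = {"x": 0, "y": 0, "w": 0, "h": 0}
    return handle


def native_object_move(handle, x, y):
    _objects[handle].update(x=x, y=y)


def native_object_resize(handle, w, h):
    _objects[handle].update(w=w, h=h)


def native_object_geometry_get(handle):
    o = _objects[handle]
    return (o["x"], o["y"], o["w"], o["h"])


class Rectangle:
    """Object-style binding: the handle is hidden behind a real object."""

    def __init__(self):
        self._handle = native_rectangle_add()

    @property
    def geometry(self):
        return native_object_geometry_get(self._handle)

    @geometry.setter
    def geometry(self, value):
        x, y, w, h = value
        native_object_move(self._handle, x, y)
        native_object_resize(self._handle, w, h)


if __name__ == "__main__":
    # Flat style: one script-level call per native function.
    h = native_rectangle_add()
    native_object_move(h, 10, 20)
    native_object_resize(h, 100, 50)

    # Object style: the same native calls, wrapped in a property.
    r = Rectangle()
    r.geometry = (10, 20, 100, 50)
    print(native_object_geometry_get(h), r.geometry)
```

The flat style keeps the binding thin and easy to compare across engines, while the object style is more pleasant to use at the cost of an extra wrapper layer per call.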